Big Data, Pharma 4.0 and Legacy Products

Big data offers companies a unique opportunity to close the knowledge gap that exists for legacy products and processes. Companies that close this knowledge gap are better positioned to transform their manufacturing operations to take advantage of Industry 4.0.

Industry 4.0, it is said, will create “smart factories” and make systems flexible enough to customize mass-manufactured products, points that reflect pharmaceutical industry trends toward increased efficiency and personalized medicine (1–3). In these systems, information is shared through cloud-based platforms so that key data can inform strategic decision-making and enable continuous improvement, with the goal of moving beyond compliance.

“Pharma 4.0,” a term used to describe Industry 4.0 specific to pharmaceutical manufacturing settings, underscores the need for pharmaceutical manufacturing to be driven by process requirements. A manufacturing plant set up according to Pharma 4.0 principles consists of machines, equipment and computers that constantly monitor every aspect of the process via multiple sensors, including their own wear and tear (1).

Yet before big data analytics can be deployed, companies need to close process knowledge gaps for legacy products and define how to balance analytics with subject matter expertise.

A recent study looked at how to improve process knowledge and lay the foundation for big data applications. Its approach can be extended to any portfolio of complex manufacturing processes commercialized over recent decades. The takeaways from this study are outlined below.

Building Blocks, Definitions and CQAs

First, a company must select a methodology to characterize legacy processes and identify data sources from an ever-expanding range of options. Both can be outlined in the project charter. Holistic and integrated use of data is essential for reproducing analysis results, transferring knowledge to other products and augmenting regulatory filings.

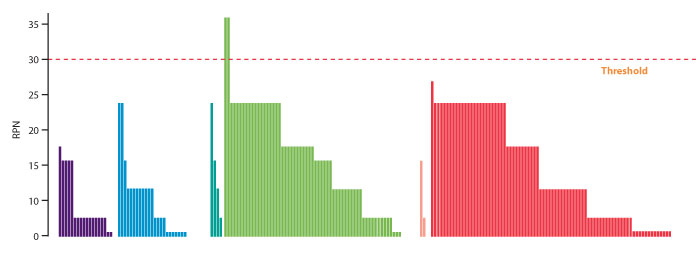

Next, the project team should identify material attributes, process parameters and other variables (e.g., mixing of batches, hold times) that may affect product critical quality attributes (CQAs) over the shelf life, according to quality-by-design principles. Information can be extracted from the continued process verification (CPV) program, annual product reviews, investigations, risk assessments, batch records and development reports. The initial cause-and-effect matrix (CEM) needs to be detailed thoroughly, and a comprehensive risk assessment should be conducted per ICH Q8 and Q9 (4,5) using a recognized method such as failure mode and effects analysis (FMEA). A review of quality deviations helps identify failure modes that could otherwise be missed. The FMEA points to the areas with the highest potential for failure, and the aggregated risk assessment results in Figure 1 show which unit operations carry the most risks and the highest-scored risks. The project team should set a threshold for the risk priority number (RPN) and improve controls for the areas that exceed it, bringing their RPN to an acceptable level.
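As a minimal sketch of the aggregation behind a chart like Figure 1, the Python below scores each failure mode with the conventional FMEA product RPN = severity × occurrence × detection, summarizes risks per unit operation and lists the failure modes above a team-chosen threshold. The failure modes, the 1 to 10 scores and the threshold of 100 are hypothetical placeholders, not values from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FailureMode:
    unit_operation: str
    description: str
    severity: int    # 1 (negligible) to 10 (hazardous)
    occurrence: int  # 1 (remote) to 10 (frequent)
    detection: int   # 1 (certain detection) to 10 (undetectable)

    @property
    def rpn(self) -> int:
        # Conventional FMEA risk priority number
        return self.severity * self.occurrence * self.detection


def summarize_by_unit_operation(modes):
    """Return {unit_operation: (count, max_rpn)}, ordered by max RPN, highest first."""
    summary = defaultdict(lambda: [0, 0])
    for fm in modes:
        entry = summary[fm.unit_operation]
        entry[0] += 1
        entry[1] = max(entry[1], fm.rpn)
    ranked = sorted(summary.items(), key=lambda item: item[1][1], reverse=True)
    return {op: tuple(v) for op, v in ranked}


def above_threshold(modes, threshold):
    """Failure modes whose RPN exceeds the team-defined threshold."""
    return [fm for fm in modes if fm.rpn > threshold]


# Hypothetical example data
modes = [
    FailureMode("Granulation", "Over-wetting", 7, 5, 4),                # RPN 140
    FailureMode("Granulation", "Binder lot variability", 6, 4, 6),      # RPN 144
    FailureMode("Coating", "Coat weight gain out of range", 8, 3, 5),   # RPN 120
    FailureMode("Compression", "Hardness drift", 5, 3, 3),              # RPN 45
]

print(summarize_by_unit_operation(modes))
print([fm.description for fm in above_threshold(modes, threshold=100)])
```

Re-running the same summary after controls are improved and the FMEA is rescored gives a direct before/after comparison against the threshold.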

The scope should aim to fill the knowledge gaps exposed by the CEM and FMEA. For products with multistep, complex processes, the recommended strategy is to first characterize the critical and less understood steps (e.g., mixing or coating steps for controlled-release dosage forms, pH adjustment for sterile liquids, excipient properties driving dissolution) and then expand to the end-to-end process. Companies can then leverage CPV trends, which provide quick indications of less robust process areas.

So Much Data to Collect

Raw material attributes, process parameters (including lags between unit operations and hold times) and in-process quality attributes need to be collected for as many batches as possible. Ideally, this information is collected directly from equipment and transferred into databases for analysis without human intervention. Often, however, it is scattered and not suitable for analysis. In such cases, software tools can be developed to assemble the available data from disparate sources and structure it for analytics. This initial effort should contribute to, and be followed by, an infrastructure of databases and automated or semi-automated analyses that ensures data quality and integrity.
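A minimal sketch of such an assembly tool, assuming each source can already be exported as records keyed by batch ID. The source names (`coa`, `historian`, `lims`), field names and values are hypothetical. The merge keeps one row per batch and reports which expected fields are missing, a simple first data-quality check before any analysis.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def assemble_batches(sources: Dict[str, List[Record]], key: str = "batch_id") -> Dict[str, Record]:
    """Merge records from several sources into one row per batch.

    Field names are prefixed with the source name so that identically named
    fields from different systems do not overwrite each other.
    """
    merged: Dict[str, Record] = {}
    for source_name, records in sources.items():
        for rec in records:
            row = merged.setdefault(rec[key], {key: rec[key]})
            for field, value in rec.items():
                if field != key:
                    row[f"{source_name}.{field}"] = value
    return merged


def missing_fields(merged: Dict[str, Record], expected: List[str]) -> Dict[str, List[str]]:
    """Report, per batch, which expected fields are absent or None."""
    report = {}
    for batch_id, row in merged.items():
        gaps = [f for f in expected if row.get(f) is None]
        if gaps:
            report[batch_id] = gaps
    return report


# Hypothetical exports from three disparate systems
sources = {
    "coa": [  # raw material certificates of analysis
        {"batch_id": "B001", "polymer_viscosity": 102.0},
        {"batch_id": "B002", "polymer_viscosity": 97.5},
    ],
    "historian": [  # process data captured from equipment
        {"batch_id": "B001", "granulation_hold_h": 4.0},
        {"batch_id": "B002", "granulation_hold_h": None},
    ],
    "lims": [  # in-process and release test results
        {"batch_id": "B001", "assay_pct": 99.1},
    ],
}

table = assemble_batches(sources)
expected = ["coa.polymer_viscosity", "historian.granulation_hold_h", "lims.assay_pct"]
print(missing_fields(table, expected))
```

Prefixing fields by source keeps provenance visible, which helps when a data-integrity question has to be traced back to the originating system.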

Statistical Analysis: A Numbers Game

Statistical analysis advances process understanding and knowledge. Its results help separate signal from noise, distinguish causation from correlation, identify trends and make comparisons.

Methodologies applied to dataset assessment include, but are not limited to, data distribution analysis, control charts, correlation analysis and multivariate data analysis (MVDA) tools such as partial least squares (PLS) modeling and principal component analysis (PCA). Some of these methods, notably control charts and capability calculations, are now routinely used, but more sophisticated techniques are needed to dissect large amounts of data, gain new insights and process knowledge, and build predictive models of process behavior.
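For the routine tools, a minimal sketch of an individuals (I) control chart and a Cpk capability index, using only the Python standard library. The limits use the common moving-range estimate of short-term sigma (average moving range divided by d2 = 1.128 for ranges of two consecutive points); the assay values and the 95.0 to 105.0 % specification are hypothetical.

```python
from statistics import mean

D2_N2 = 1.128  # bias-correction constant d2 for moving ranges of size 2


def individuals_chart(values):
    """Center line, 3-sigma limits and sigma estimate for an individuals chart."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma = mean(moving_ranges) / D2_N2  # short-term (within) sigma estimate
    center = mean(values)
    return center, center - 3 * sigma, center + 3 * sigma, sigma


def cpk(values, lsl, usl, sigma):
    """Capability index: distance to the nearest spec limit in units of 3 sigma."""
    center = mean(values)
    return min(usl - center, center - lsl) / (3 * sigma)


# Hypothetical assay results (% label claim) for consecutive batches
assay = [99.8, 100.4, 99.5, 100.9, 100.1, 99.7, 100.6, 100.0]
center, lcl, ucl, sigma = individuals_chart(assay)
out_of_control = [x for x in assay if not lcl <= x <= ucl]
print(f"CL={center:.2f} LCL={lcl:.2f} UCL={ucl:.2f}")
print(f"Cpk={cpk(assay, 95.0, 105.0, sigma):.2f}")
print("signals:", out_of_control)
```

Both calculations are univariate: each looks at one attribute at a time, which is exactly the limitation the multivariate methods address.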

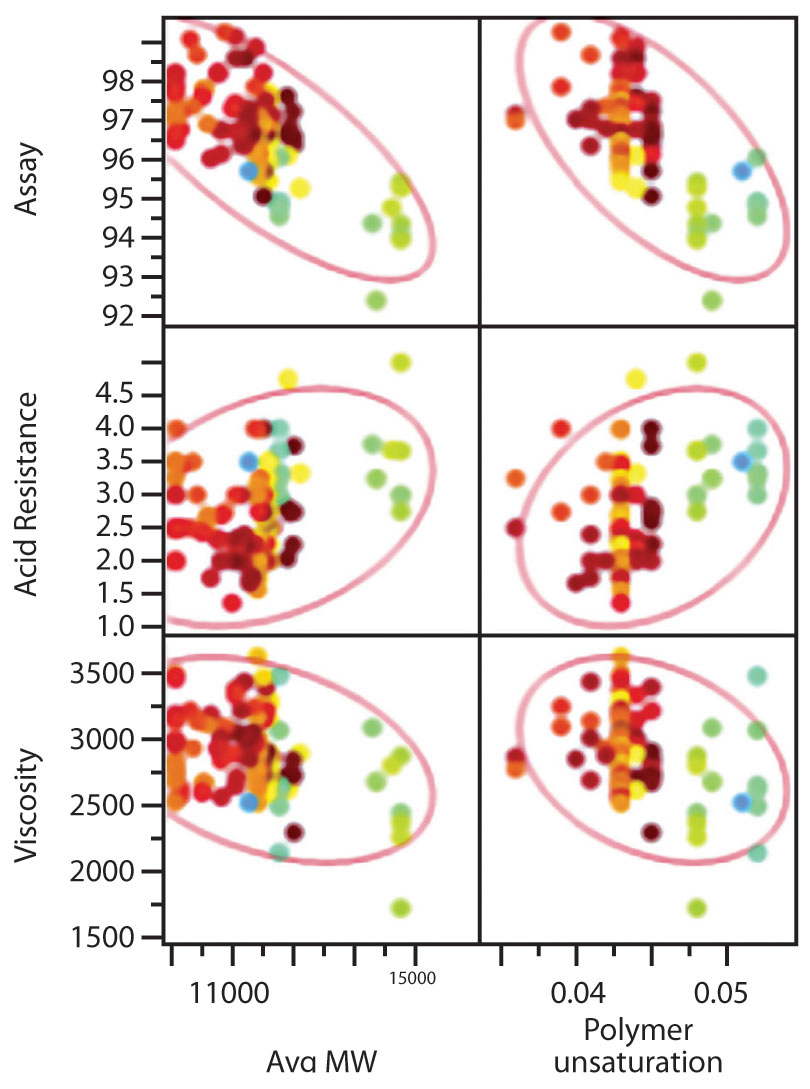

MVDA can be used to show how raw material attributes affect CQAs and disrupt process performance, as not all raw material variability is neutralized by processing. Raw material specifications are often set too wide to reflect their impact on final product quality attributes, so the true cause-and-effect relationships must be derived from statistical analysis of a large dataset. The example in Figure 2 shows how attributes of a dissolution-rate-controlling polymer significantly affect product assay; the data, colored by year of supply, also show that raw material properties can change over time.

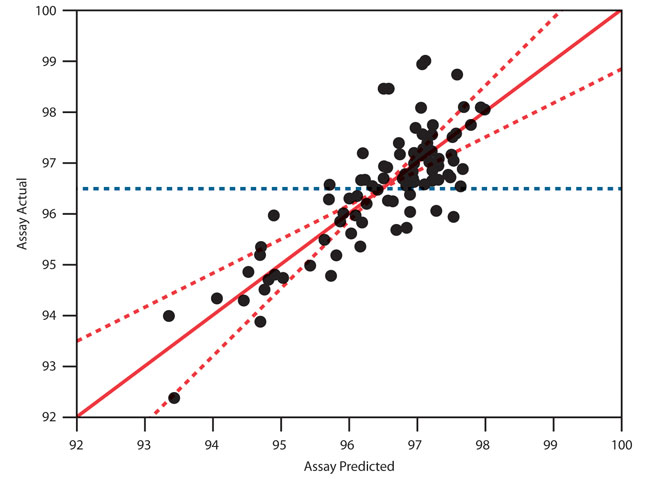

With this understanding, models can be constructed to predict CQA values for ranges of significant raw material attributes (Figure 3).
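As a rough illustration of such a predictive model, the sketch below fits an ordinary least squares model of a CQA on two raw material attributes by solving the normal equations, then predicts the CQA across a range of one attribute. It is a simplified stand-in, not the model behind Figure 3; the attribute names and data are hypothetical, and the data were generated without noise (assay = 90 + 0.1 × viscosity − 0.5 × moisture) so the fit can be checked.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, y):
    """Least squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    X = [[1.0] + list(r) for r in rows]
    p = len(X[0])
    xtx = [[sum(xi[i] * xi[j] for xi in X) for j in range(p)] for i in range(p)]
    xty = [sum(xi[i] * yi for xi, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(coef, row):
    return coef[0] + sum(c * v for c, v in zip(coef[1:], row))


# Hypothetical lots: polymer viscosity, polymer moisture (%) and product assay (%)
viscosity = [95, 100, 105, 110, 98, 103]
moisture = [2.0, 3.0, 2.5, 3.5, 1.5, 2.8]
assay = [98.5, 98.5, 99.25, 99.25, 99.05, 98.9]

coef = fit_ols(list(zip(viscosity, moisture)), assay)
for v in range(95, 111, 5):  # predicted assay across a viscosity range at 2.5 % moisture
    print(v, round(predict(coef, [v, 2.5]), 2))
```

Real lot data would carry noise, so in practice the model would be judged on residuals, p-values and leverage plots before its predictions were trusted.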

Modern pharmaceutical equipment includes sensors that collect a wide range of data for evaluating process dynamics. This is a double-edged sword: the added dimensions can complicate analysis because many parameters, such as temperature, moisture and pressure readings, will be collinear, with some moving in the same (or opposite) direction at the same time.
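A simple way to expose this collinearity before modeling is a pairwise Pearson correlation screen across sensor channels, sketched below with hypothetical channel names and readings. The 0.9 cut-off is an arbitrary illustration, not a standard. Strongly correlated channels are candidates for PCA or PLS rather than separate univariate terms.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def collinear_pairs(channels, cutoff=0.9):
    """Return (name_a, name_b, r) for channel pairs with |r| >= cutoff."""
    flagged = []
    for (a, xa), (b, xb) in combinations(channels.items(), 2):
        r = pearson(xa, xb)
        if abs(r) >= cutoff:
            flagged.append((a, b, round(r, 3)))
    return flagged


# Hypothetical fluid-bed dryer readings sampled at the same time points
channels = {
    "inlet_temp_C": [60, 62, 64, 66, 68, 70],
    "outlet_temp_C": [35, 36, 38, 39, 41, 42],
    "product_moist_pct": [8.0, 7.1, 6.0, 5.2, 4.1, 3.0],
    "filter_dp_mbar": [12, 15, 11, 14, 12, 13],
}
for pair in collinear_pairs(channels):
    print(pair)
```

Here the two temperatures and the product moisture move together (moisture in the opposite direction), while the filter pressure drop does not.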

MVDA methods such as PCA, and diagnostic graphics such as effect leverage plots, are sophisticated tools made accessible to subject matter experts by graphical, interactive software such as JMP. These models identify the primary control variables, as well as changes in methods and materials that promise to reduce common-cause variation. Low p-values (see the right-most column in Figure 3) indicate parameters that influence one or more outcomes. The p-values themselves are not always well understood, but the horizontal bars to the left of that column convey the importance of each factor examined in the study. On the far left, the effect leverage plot, with points following the solid diagonal line, indicates that the model as a whole satisfies its statistical assumptions. Good model fit reduces the risk of overgeneralizing from a few observations, and such graphics help subject matter experts draw correct conclusions from the data.

Data analysis results should identify the areas of the product control strategy that need adjusting and show that material attributes and process parameters are normal and that variability is well controlled. End-to-end analysis should also provide additional insights into overall process performance, such as a recommendation to perform periodic PCA on raw material attributes to detect process shifts that cannot be seen in univariate trending. As an added benefit, improvements to the control strategy will guide the placement of monitoring sensors for future automation.
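A minimal sketch of such periodic multivariate monitoring, assuming a reference set of lots whose raw material attributes define normal behavior: attributes are standardized, principal components are extracted from the correlation matrix (here with a small Jacobi eigenvalue routine so only the standard library is needed), and each new lot gets a Hotelling T² score on the retained components. The lot data, attribute pairing and fixed alert limit are hypothetical; in practice the limit would come from an F-distribution or an empirical percentile of reference T² values.

```python
from math import sqrt
from statistics import mean, stdev

ALERT_LIMIT = 12.0  # hypothetical T^2 alert limit, for illustration only


def jacobi_eigh(a, sweeps=50, tol=1e-20):
    """Eigenpairs of a symmetric matrix via cyclic Jacobi rotations.

    Returns [(eigenvalue, eigenvector), ...] sorted largest eigenvalue first.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + sqrt(theta * theta + 1))
                c = 1 / sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A J and V <- V J (columns p and q)
                    a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
                    v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
                for k in range(n):  # A <- J^T A (rows p and q)
                    a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    pairs = [(a[i][i], [v[k][i] for k in range(n)]) for i in range(n)]
    return sorted(pairs, key=lambda pair: pair[0], reverse=True)


def fit_pca_monitor(reference, k):
    """PCA model from reference lots (rows = lots, columns = attributes)."""
    cols = list(zip(*reference))
    mu = [mean(c) for c in cols]
    sd = [stdev(c) for c in cols]
    z = [[(x - m) / s for x, m, s in zip(row, mu, sd)] for row in reference]
    n, p = len(z), len(mu)
    corr = [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(p)] for i in range(p)]
    return mu, sd, jacobi_eigh(corr)[:k]


def hotelling_t2(row, model):
    """Hotelling T^2 of one lot on the retained principal components."""
    mu, sd, components = model
    z = [(x - m) / s for x, m, s in zip(row, mu, sd)]
    return sum(sum(zi * vi for zi, vi in zip(z, vec)) ** 2 / lam for lam, vec in components)


# Hypothetical polymer lots: [viscosity (mPa s), molecular weight (kDa)]
reference = [[100, 50.1], [102, 51.0], [98, 48.9], [101, 50.6],
             [99, 49.4], [103, 51.5], [97, 48.6], [100, 49.9]]
model = fit_pca_monitor(reference, k=2)  # only 2 attributes, so both components are kept

for lot in ([101.5, 50.8], [103, 48.8]):  # each value lies within its univariate +/- 3 sd range
    t2 = hotelling_t2(lot, model)
    print(lot, round(t2, 1), "ALERT" if t2 > ALERT_LIMIT else "ok")
```

The second lot passes a univariate check on each attribute but breaks the usual relationship between them, so its T² is far above the reference lots; this is the kind of shift univariate trending misses.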

After data from the critical steps or the entire process have been analyzed, the team revises the CEM and FMEA with the results of the MVDA study, including the scientific knowledge used to interpret them. The CEM, with causality justifications, and the FMEA, with new scores, capture the knowledge gained and create a new baseline. The effectiveness of the results should also be evaluated periodically.

To maintain momentum, the analyses should be automated and repeated for other products as soon as possible. Companies can build a manufacturing information model for each technology platform to expedite results.

The Transition to Pharma 4.0

Among the many challenges to adopting Pharma 4.0 is a need to house and process a rapidly growing volume of data (e.g., an online tablet inspection system generates up to 24 terabytes annually). Companies must be prepared to properly and efficiently analyze this data on a regular basis. Tools such as JMP, SAS and others allow subject matter experts to conduct advanced statistical analysis by way of visualization and interactivity.
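For a sense of scale, assuming decimal units (1 TB = 10^12 bytes) and that the 24 TB accumulates evenly over a full year, the sustained data rate from a single such inspection system works out to roughly:

$$
\frac{24 \times 10^{12}\ \text{B}}{365 \times 86\,400\ \text{s}} \approx 7.6 \times 10^{5}\ \text{B/s} \approx 0.76\ \text{MB/s} \approx 66\ \text{GB/day}
$$

Modest as a network rate, but it accumulates to terabytes that must be stored, indexed and analyzed routinely, and a plant will run many such systems.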

Pharma 4.0 requires analyzing process data in real time or near-real time so that staff can respond to signals and make decisions. Here, too, software will help, but it is critical to avoid automated tampering, i.e., adjusting the process in response to common-cause variation, as this breeds process instability. Issues generally arise in the form of special-cause variation, which will be the target of real-time analytical systems.
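One way to encode this distinction, sketched below under stated assumptions, is to raise an alert only when a special-cause rule fires on control-chart data and otherwise leave the process alone. The two rules used here (a point beyond the 3-sigma limits, and eight consecutive points on one side of the center line) are common Western Electric/Nelson-style rules; the center line, sigma and data stream are hypothetical.

```python
def special_cause_signals(values, center, sigma, run_length=8):
    """Return (index, rule) pairs where a special-cause rule fires.

    Rule 1: a single point beyond center +/- 3 sigma.
    Rule 2: run_length consecutive points on the same side of the center line
            (signalled once, at the point where the run reaches run_length).
    Points that trigger neither rule are treated as common-cause variation:
    no signal, and therefore no process adjustment.
    """
    signals = []
    run_side, run_count = 0, 0
    for i, x in enumerate(values):
        if abs(x - center) > 3 * sigma:
            signals.append((i, "beyond 3 sigma"))
        side = (x > center) - (x < center)  # +1 above, -1 below, 0 on the line
        if side != 0 and side == run_side:
            run_count += 1
        else:
            run_side, run_count = side, (1 if side != 0 else 0)
        if run_count == run_length:
            signals.append((i, f"{run_length} points on one side"))
    return signals


# Hypothetical real-time stream around a center line of 100.0 with sigma 0.5
stream = [100.2, 99.7, 100.4, 99.9, 101.8, 100.1, 100.3,
          100.2, 100.4, 100.1, 100.3, 100.2, 100.5]
print(special_cause_signals(stream, center=100.0, sigma=0.5))
```

The alternating points at the start produce no signal, so a system built this way does not "correct" ordinary noise; it reacts only to the outlier and to the sustained upward run.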

Real-time analytical activities characterize working in the system, where operations are adjusted to best ensure quality. Analytical systems put in place to support working in the system should also help reduce common-cause variation, which means optimizing methods, materials, tooling and equipment. Design of experiments offers an efficient way to do this by examining multiple factors simultaneously in a short period.
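As a minimal illustration of that efficiency, the sketch below builds a two-level full factorial design for three factors (eight runs) and estimates each factor's main effect as the difference between the mean response at its high and low settings. The factor names and responses are hypothetical, and a real study would also consider interactions, replication and randomized run order.

```python
from itertools import product


def full_factorial(factors):
    """All combinations of coded levels (-1 = low, +1 = high) for the named factors."""
    return [dict(zip(factors, levels)) for levels in product((-1, 1), repeat=len(factors))]


def main_effects(design, responses):
    """Main effect of each factor: mean response at +1 minus mean response at -1."""
    effects = {}
    for factor in design[0]:
        high = [y for run, y in zip(design, responses) if run[factor] == 1]
        low = [y for run, y in zip(design, responses) if run[factor] == -1]
        effects[factor] = sum(high) / len(high) - sum(low) / len(low)
    return effects


factors = ["spray_rate", "inlet_temp", "pan_speed"]
design = full_factorial(factors)  # 8 runs cover every combination of the 3 factors
# Hypothetical dissolution results (% released at a fixed time point), one per run,
# listed in the same order as `design`
responses = [78.5, 79.5, 74.5, 75.5, 84.5, 85.5, 80.5, 81.5]

for factor, effect in main_effects(design, responses).items():
    print(f"{factor}: {effect:+.1f}")
```

Every run contributes to the estimate of every factor's effect, which is why eight runs suffice here instead of varying one factor at a time.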

When organizations achieve a similar level of product and process knowledge for their entire portfolio, regardless of product time on the market, they are expected to find it easier to take advantage of new manufacturing technologies.

Beyond the need for the pharmaceutical industry to remain globally competitive (capacity, cost, agility), there is an increasing push by regulators for continuous product monitoring and process understanding (1). There is a growing expectation that manufacturers will perform such reviews much more frequently than annually. Pharma 4.0 technology, enabled by big data, allows for continuous, real-time monitoring of manufacturing processes.

[Editor’s Note: online version includes additional figures and text along with an expanded version of Figure 3.]

References

1. Schoerie, T. “Pharma 4.0 – How Industry 4.0 impacts in Pharma.” PharmOut Blog. https://www.pharmout.net/pharma-4-0/ (accessed Sept. 13, 2018).

2. Markarian, J. “Pharma 4.0.” Pharmaceutical Technology (2018): 24. www.pharmtech.com/pharma-40 (accessed Sept. 16, 2018).

3. Wright, I. “What is Industry 4.0 Anyway?” Engineering.com (Feb. 22, 2018). www.engineering.com/AdvancedManufacturing/ArticleID/16521/What-Is-Industry-40-Anyway.aspx (accessed Sept. 16, 2018).

4. ICH Harmonized Tripartite Guideline Q8(R2): Pharmaceutical Development.

5. ICH Harmonized Tripartite Guideline Q9: Quality Risk Management.